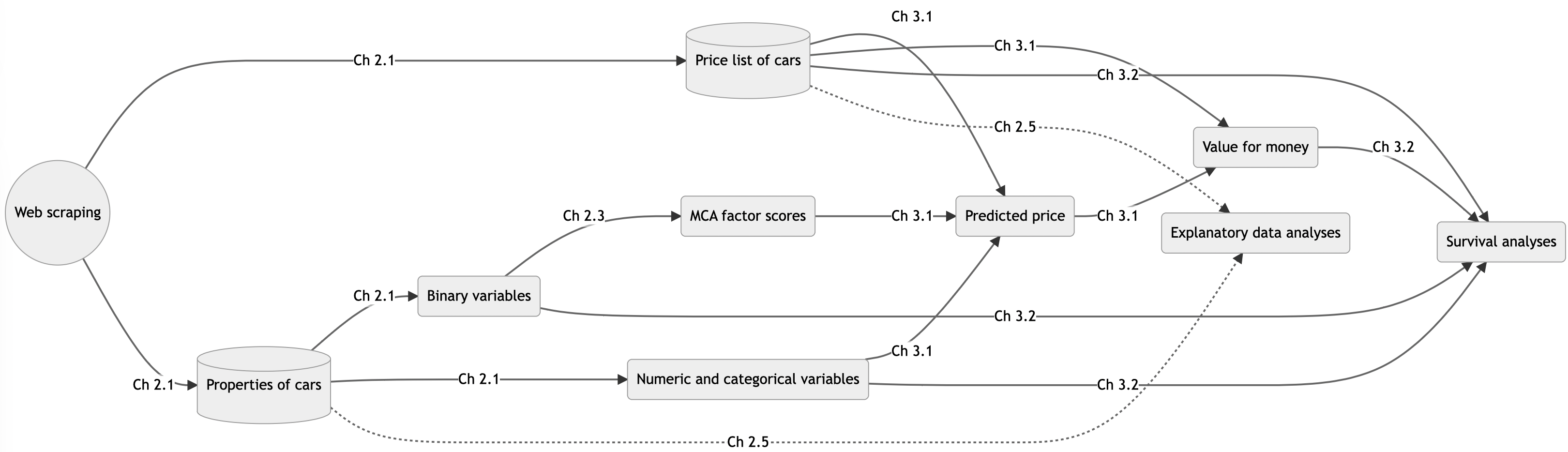

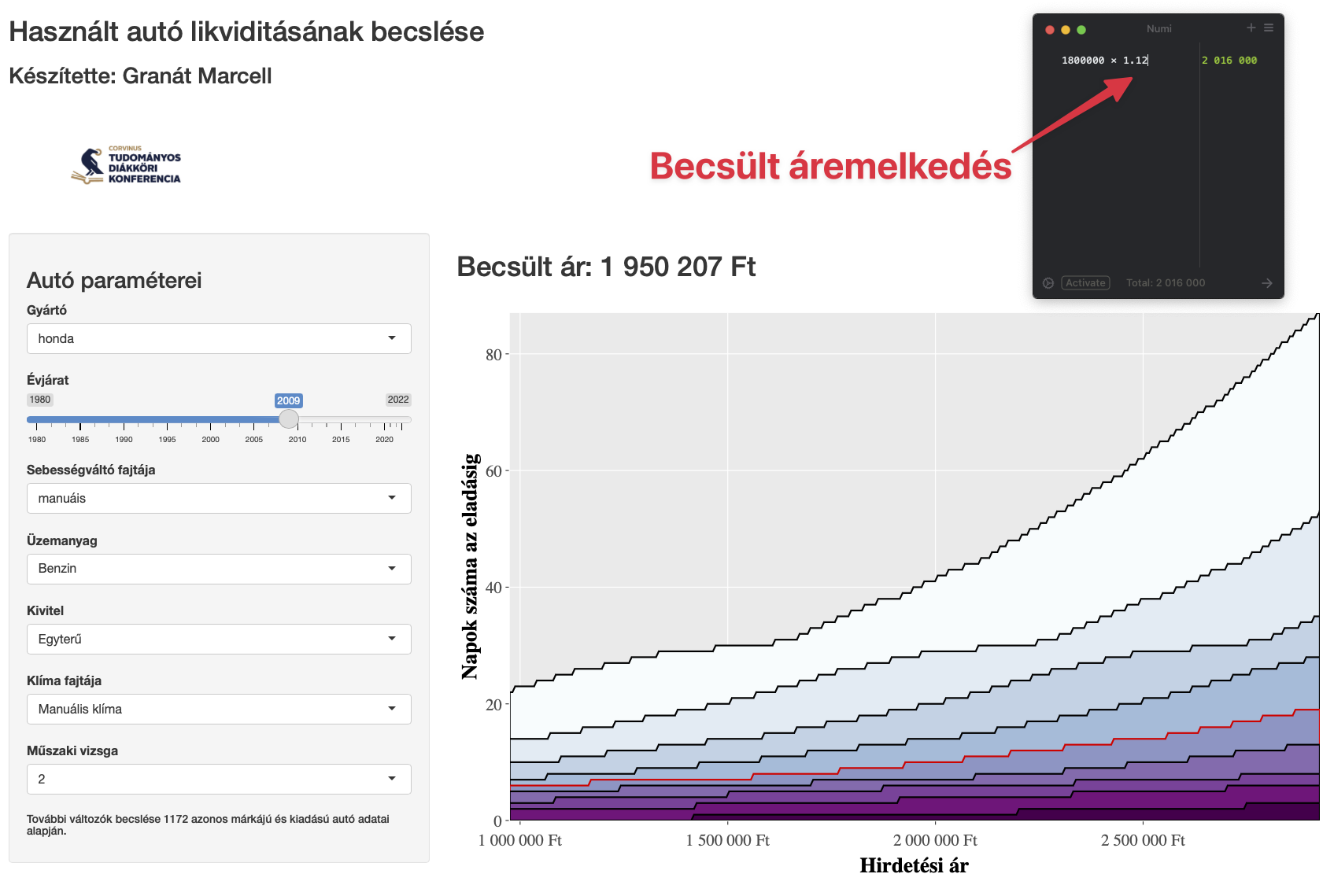

class: center, middle, title-slide .title[ # Liquidity in the Used Car Market ] .subtitle[ ## <img src="mnb_intezet.png" style="width:30.0%" /> <img src="nje-logo.jpg" style="width:25.0%" /> An Application of Survival Analysis ] .author[ ### Marcell Granát ] .institute[ ### MNB Insititute & .blue[John von Neumann University] ] .date[ ### 2022 ] --- class: inverse, center, middle # You don't dare to buy a car based on your models! #### An unnamed fellow student --- <style type="text/css"> .red { color: red; } .blue { color: #378C95; } strong { color: red; } a { color: #378C95; font-weight: bold; } .remark-inline-code { font-weight: 900; background-color: #a7d5e7; } .caption { color: #378C95; font-style: italic; text-align: center; } .content-box { box-sizing: content-box; background-color: #378C95; /* Total width: 160px + (2 * 20px) + (2 * 8px) = 216px Total height: 80px + (2 * 20px) + (2 * 8px) = 136px Content box width: 160px Content box height: 80px */ } .content-box-green { background-color: #d9edc2; } .content-box-red { background-color: #f9dbdb; } .fullprice { text-decoration: line-through; } </style> ## Motivation (as a journal paper) - Car is one of the most expensive consumer durables on a household's bill - Presumably the most important consideration is the price-to-value ratio - Commonly sold on the used car market after use -- - **How long it will take to sell?** -- #### DAG <img src="dag.png" width="700px" height="180px" style="display: block; margin: auto;" /> --- ## Source of data - Daily scraped from the website [www.hasznaltauto.hu](https://www.hasznaltauto.hu) - R codes are available: [https://marcellgranat.github.io/Data-Analysis/seminar5.html]() - **All cars available** that day were collected, as well as all available information about the vehicles <img src="hasznaltauto_list.png" width="700px" height="500px" style="display: block; margin: auto;" /> --- ## Source of data #### Daily scrape - Platform dependent - Windows: https://www.seancarney.ca/2020/10/11/scheduling-r-scripts-to-run-automatically-in-windows/ - Mac: Automator --- ## Source of data #### Daily scrape - Platform dependent - Windows: https://www.seancarney.ca/2020/10/11/scheduling-r-scripts-to-run-automatically-in-windows/ - Mac: Automator <img src="laptop.JPG" width="360px" height="270px" style="display: block; margin: auto;" /> --- ## Source of data #### Daily scrape <img src="analysis-in-practice_files/figure-html/unnamed-chunk-7-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- class: inverse, center, middle # The data --- ## The data ## Detailed information about the cars ```r cars_data ``` ``` ## # A tibble: 613,614 × 24 ## id brand evjarat allapot kivitel kilometerora_al… szallithato_sze… ## <chr> <fct> <int> <fct> <fct> <int> <int> ## 1 15650800 bmw 2019 Újszerű Sedan 5 5 ## 2 15988457 peugeot 2020 Kitűnő Ferdehátú 5000 5 ## 3 16062262 peugeot 2020 Kitűnő Városi te… 1 5 ## 4 16635025 opel 2021 Kitűnő Városi te… 2 5 ## 5 16636084 opel 2021 Kitűnő Városi te… 3 5 ## 6 16696088 opel 2021 Kitűnő Kombi 5 9 ## 7 16742853 peugeot 2021 Kitűnő Városi te… 4 5 ## 8 16776038 opel 2021 Kitűnő Egyterű 2 7 ## 9 16814133 peugeot 2021 Kitűnő Egyterű 4 7 ## 10 16844090 suzuki 2021 Normál Városi te… 1 5 ## # … with 613,604 more rows, and 17 more variables: ajtok_szama <fct>, ## # klima_fajtaja <fct>, uzemanyag <fct>, hengerurtartalom <int>, ## # teljesitmeny <int>, henger_elrendezes <fct>, hajtas <fct>, ## # sebessegvalto_fajtaja <fct>, muszaki_vizsga_ervenyes <ord>, ## # nyari_gumi_meret <int>, szin <fct>, sajat_tomeg <int>, teljes_tomeg <int>, ## # csomagtarto <int>, nyari_gumi_meret2 <int>, nyari_gumi_meret3 <int>, ## # sebessegvalto_fokozatszam <int> ``` --- ## The data ## Detailed information about the cars .pull-left[ <img src="example_car.png" width="700px" height="450px" style="display: block; margin: auto;" /> ] .pull-right[ **General characteristics**: available for all cars **Accessories**: handled as binary (whether the car has or not) ] --- ## The Data #### Prices .pull-left[ ```r prices_df ``` ``` ## # A tibble: 12,409,519 × 3 ## id price date ## <chr> <dbl> <date> ## 1 15650800 14970000 2021-05-22 ## 2 15650800 14970000 2021-05-22 ## 3 15988457 10800000 2021-05-22 ## 4 15988457 10800000 2021-05-22 ## 5 16062262 8299000 2021-05-22 ## 6 16062262 8299000 2021-05-22 ## 7 16635025 5299000 2021-05-22 ## 8 16635025 5299000 2021-05-22 ## 9 16636084 7789000 2021-05-22 ## 10 16636084 7789000 2021-05-22 ## # … with 12,409,509 more rows ``` ] -- .pull-right[ ##### Assumption .content-box-red[ If a car is available on the website one day but not the next, it has been sold at the last listed price. ] ] --- ## The Data #### Handling missing data <img src="analysis-in-practice_files/figure-html/cars_visdat-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## The Data #### Handling missing data - Size of the data is too large to use MICE - Imputation based on **KNN** using only the presumably relevant variables ```r knn_impute <- function(.data, formula, ...) { outcome <- gsub("~.*", "", formula) %>% str_trim() predictors <- gsub(".* ~", "", formula) %>% str_split("\\+") %>% reduce(c) %>% str_trim() recipe(.data) %>% step_normalize(all_numeric(), -outcome) %>% * step_impute_knn(outcome, impute_with = predictors, ...) %>% prep(retain = TRUE) %>% juice() %>% select(outcome) } ``` --- ## The Data #### Handling missing data .pull-left[ <img src="knn_wiki.svg" width="700px" height="450px" style="display: block; margin: auto;" /> ] .pull-right[ > Example of k-NN classification. The test sample (green dot) should be classified either to blue squares or to red triangles. If k = 3 (solid line circle) it is assigned to the red triangles because there are 2 triangles and only 1 square inside the inner circle. If k = 5 (dashed line circle) it is assigned to the blue squares (3 squares vs. 2 triangles inside the outer circle). Source: [Wikipedia](https://en.wikipedia.org/wiki/K-nearest_neighbors_algorithm) ] --- ## The data #### Handling missing data |Imputed variable |Predictors | |:-----------------------------|:----------------------------------------------------------------------------------------------| |Cylinder capacity |Brand, year of manufacture, number of persons transported | |Cylinder layout |Brand, year of manufacture, cylinder capacity, engine performance | |Number of doors |Brand, year of manufacture, own weight, number of persons transported | |Number of persons transported |Brand, year of manufacture, own weight | |Own weight |Brand, year of manufacture, engine performance, number of persons transported, number of doors | |Tire size 1 |Brand, year of manufacture, own weight | |Tire size 2 |Brand, year of manufacture, own weight | |Tire size 3 |Brand, year of manufacture, own weight | |Total weight |Brand, year of manufacture, engine performance, number of persons transported, number of doors | |Trunk |Brand, year of manufacture, number of persons transported, own weight, total weight | |Type of fuel |Brand, year of manufacture, engine performance, cylinder capacity | |Wheel drive |Brand, year of manufacture, engine performance, type of fuel | --- ## The data #### Cleaning ```r szin = case_when( str_detect(szin, "kék") ~ "kek", str_detect(szin, "ibolya") ~ "kek", str_detect(szin, "türkiz") ~ "kek", str_detect(szin, "piros") ~ "piros", str_detect(szin, "vörös") ~ "piros", str_detect(szin, "bordó") ~ "piros", str_detect(szin, "fekete") ~ "fekete", str_detect(szin, "szürke") ~ "szurke", str_detect(szin, "ezüst") ~ "szurke", str_detect(szin, "fehér") ~ "feher", str_detect(szin, "barna") ~ "barna", str_detect(szin, "homok") ~ "barna", str_detect(szin, "pezsgő") ~ "barna", str_detect(szin, "bézs") ~ "barna", str_detect(szin, "vaj") ~ "barna", str_detect(szin, "zöld") ~ "zold", str_detect(szin, "sárga") ~ "sarga", str_detect(szin, "narancs") ~ "sarga", str_detect(szin, "lila") ~ "lila", * TRUE ~ "other"), ``` --- ## The data #### Cleaning ```r filter(!is.na(kilometerora_allasa) & !is.na(evjarat)) %>% # evjarat ~ 1000 cars filter(kilometerora_allasa > 0) ``` --- ## The data #### Cleaning ```r price_change_df <- prices_df %>% group_by(id) %>% summarise( min_price = min(price, na.rm = TRUE), max_price = max(price, na.rm = TRUE), * price_change = max_price / min_price ) combined_df %>% anti_join( price_change_df %>% * filter(price_change > 5) %>% # unrealistic jump in price ~ 100 cars select(id) ) %>% * filter(price <= 3e7 & price >= 3e5 & !is.na(fct_1)) %>% # unrealistic prices ~ 15 000 cars na.omit() ``` --- ## The data #### Dimension reduction - Handling the "accesories" type data as binary resulted 235 dummy variables. - Some of the models we applied in this study use one-hot coding (470 0/1 columns) 😱 -- - Appropriate methodology for binary data: **MCA** --- ## The data #### Dimension reduction ```r training_df <- factor_df %>% group_by_at(setdiff(names(additional_factor_df), "id")) %>% sample_frac(., size=.05) %>% ungroup() %>% na.omit() %>% select(where(~ n_distinct(.) > 1), -id) %>% mutate_all(as.character) %>% mutate_all(as.factor) n_factor <- training_df %>% FactoMineR::MCA(graph = FALSE) %>% # can calculate n_factor only from {FactoMineR} factoextra::get_eigenvalue() %>% data.frame() %>% * filter(eigenvalue > mean(eigenvalue)) %>% nrow() fit_mca <- training_df %>% * MASS::mca(nf = n_factor) # can predict only w {MASS} ``` --- ## The data #### Dimension reduction ```r mca_factor_df <- factor_df %>% select(names(training_df)) %>% mutate_all(as.character) %>% mutate_all(as.factor) %>% group_by(cut(row_number(), 100)) %>% # split the df to 100 pieces to avoid memory overload group_map(~.) %>% * map(~ data.frame(predict(object = fit_mca, newdata = .))) ``` -- The outcome: **236** numeric variables --- class: middle, center ### The mainsteps of the study  --- ## Determining the market price -- - Value of each vehicle is different -- - Focus of this study is not exploring the causal effects that influence the sale price, but the **accuracy** of the estimate -- - Testing several ML methods in a **stratified k-fold cross-validation** (9,000/1,000; before July) framework -- - Comparing models with the best hyperparamter set on a testing set (10,000; before July) ```r training_df %>% sample_n(1e4) %>% vfold_cv(strata = price, v = 10) ``` --- ## Determining the market price #### Descriptive statistics of offer prices in HUF |Quarter | Mean | Median | Standard deviation | Skeness | Kurtosis | |:---------------|:---------:|:---------:|:------------------:|:--------:|:--------:| |2021/04-2021/07 | 3,710,100 | 1,949,000 | 4,821,653 | 2.795249 | 11.63271 | |2021/07-2021/10 | 3,774,025 | 1,950,000 | 4,883,133 | 2.731550 | 11.19031 | |2021/10-2022/01 | 3,904,783 | 1,990,000 | 5,057,759 | 2.626280 | 10.32879 | |2022/01-2022/04 | 4,068,776 | 2,200,000 | 4,873,486 | 2.524668 | 10.10797 | |Total | 3,871,298 | 1,999,000 | 4,931,980 | 2.659425 | 10.71338 | *Only the cars used in the modeling were taken into account in the calculation, excluding outliers.* --- ## Determining the market price <img src="recipes.png" width="300px" height="300px" style="display: block; margin: auto;" /> ```r recipe(price ~ ., data = training_df) %>% * step_log(all_outcomes()) %>% step_rm(id, date) %>% * step_normalize(all_numeric()) ``` --- ## Determining the market price #### Number of hyperparameters, combination of tested parameters, and total runtime of hyperparameter tuning |Model | # of hyperparameters | # of parameter combinations | Runtime (seconds) | |:----------------------------------|:--------------------:|:---------------------------:|:-----------------:| |EXtreme Gradient Boosting Training | 7 | 128 | 66,280 | |Ordinary Least Squares | 0 | 1 | 4 | |Single-hidden-layer neural network | 3 | 46 | 37,353 | |K-Nearest Neighbor | 3 | 32 | 213,280 | |Random Forest | 2 | 32 | 60,923 | |Linear support vector machines | 3 | 128 | 184,857 | |Regression tree | 3 | 64 | 814 | --- ## Determining the market price #### Stratified k-fold cross-validation R-squared by **OLS** | fold | rsq | |:------:|:---------:| | Fold01 | 0.9179197 | | Fold02 | 0.9066590 | | Fold03 | 0.9118202 | | Fold04 | 0.9032560 | | Fold05 | 0.8824825 | | Fold06 | 0.8839070 | | Fold07 | 0.9053443 | | Fold08 | 0.9062825 | | Fold09 | 0.9170148 | | Fold10 | 0.9120721 | --- ## Determining the market price <img src="analysis-in-practice_files/figure-html/unnamed-chunk-26-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Determining the market price <img src="analysis-in-practice_files/figure-html/unnamed-chunk-27-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Determining the market price <img src="analysis-in-practice_files/figure-html/unnamed-chunk-28-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Determining the market price <img src="analysis-in-practice_files/figure-html/unnamed-chunk-29-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Determining the market price <img src="analysis-in-practice_files/figure-html/unnamed-chunk-30-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Determining the market price <img src="analysis-in-practice_files/figure-html/unnamed-chunk-31-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Determining the market price #### Comparing the estimation accuracy of the investigated models <img src="analysis-in-practice_files/figure-html/unnamed-chunk-32-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Determining the market price #### Descriptive statistics of the estimated price-to-value |Quarter | Min (%) | Lower quartile (%) | Median (%) | Higher quartile (%) | Max (%) | Standard deviation (%p) | |:---------------|:-------:|:------------------:|:----------:|:-------------------:|:-------:|:-----------------------:| |2021/04-2021/07 | -48.13% | -2.83% | -0.08% | 2.66% | 61.95% | 5.47% | |2021/07-2021/10 | -51.17% | -2.69% | 0.06% | 2.87% | 208.45% | 5.71% | |2021/10-2022/01 | -50.43% | -2.75% | 0.07% | 2.96% | 198.91% | 5.87% | |2022/01-2022/04 | -47.48% | -2.63% | 0.10% | 2.89% | 124.62% | 5.63% | |Total | -51.17% | -2.72% | 0.06% | 2.89% | 208.45% | 5.74% | *Price-to-value is defined as the proportion of offer price and the predicted price from XGBoost. To report in this table we subtracted 1 from the values.* --- class: inverse, middle, center # Survival Analysis --- ## Survival Analysis #### Survival curve showing the probability of not having disappeared from the supply <img src="analysis-in-practice_files/figure-html/unnamed-chunk-34-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Survival Analysis Survival function: `$$G(t) = P(T \ge t)$$` The hazard rate (h) (intensity process: `$$h(t)=\lim _{\Delta \searrow 0} \frac{\mathbb{P}(T<t+\Delta \mid T \geq t)}{\Delta}=\frac{f(t)}{G(t)}$$` Proportional effect on the intensity process: `$$h_{i}(t)=c_{i} h_{0}(t),$$` Regression: `$$\ln c_{i}=\sum_{j=1}^{p} \beta_{j} x_{i j},$$` where `\(i\)` refers to individual cars and `\(j\)` refers to explanatory variables. --- ## Survival analysis - Fitting a model with 250 explanatory variables would be inappropriate for interpretation -- `$$\text{LASSO}=\text{GLM} + \lambda \sum_{j=1}^p{|\beta_j|}$$` -- - The lambda that leads to the model having the lowest number of explanatory variables with **adequate accuracy** (within 1 standard error of the best model) is used for feature selection -- - **Harell C index**: proportion of concordant pairs in the total concordant and discordant (when the car estimated with the higher intensity is sold later) pairs --- ## Survival analysis - Fitting a model with 250 explanatory variables would be inappropriate for interpretation `$$\text{LASSO}=\text{GLM} + \lambda \sum_{j=1}^p{|\beta_j|}$$` - The lambda that leads to the model having the lowest number of explanatory variables with **adequate accuracy** (within 1 standard error of the best model) is used for feature selection - **Harell C index**: proportion of concordant pairs in the total concordant and discordant (when the car estimated with the higher intensity is sold later) pairs - LASSO-based model introduces bias by shrinking the coefficients of all variables towards zero, and does not provide information on the significance of the variable -- - Thus an unregularized with the selected variables is used for interpretation --- ## Survival analysis #### K-fold cross-validation for finding the optimal lambda hyperparameter <img src="analysis-in-practice_files/figure-html/unnamed-chunk-35-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Survival analysis #### Estimated coefficients of the Cox models <img src="analysis-in-practice_files/figure-html/unnamed-chunk-36-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Survival analysis #### Estimated coefficients of the Cox models <img src="analysis-in-practice_files/figure-html/unnamed-chunk-37-1.png" width="700px" height="450px" style="display: block; margin: auto;" /> --- ## Survival analysis #### Survival model's response to the change of price-to-value <img src="analysis-in-practice_files/figure-html/unnamed-chunk-38-1.png" width="700px" height="350px" style="display: block; margin: auto;" /> *To capture the partial effect of price-to-value assuming that all other variables are unchanged, numeric predictors are fixed at their medians, while categorical and logical variables are fixed at their modes. The monotone increasing red line means that if the price-to-value is lower (the ratio of the offer price to the predicted price is higher), then the median selling time is higher.* --- ## Survival analysis #### Example with a valid car  --- class: center, middle # Thank you for your attention! Slides are available at [www.marcellgranat.com](https://www.marcellgranat.com) --- # References <p><cite>Hungarian Central Statistical Office (2020). <em>Statistical Yearbook of Hungary, 2019</em>. Budapest: Hungarian Central Statistical Office.</cite></p> <p><cite>Hungarian Central Statistical Office (2022). <em>Consumer Price Indices by COICOP classification</em>. Accessed on 03.04.2022. URL: <a href="https://www.ksh.hu/stadat_files/ara/en/ara0005.html">https://www.ksh.hu/stadat_files/ara/en/ara0005.html</a>.</cite></p> <p><cite>Liao, S., Y. Lin, Y. Lin, et al. (2014). “Missing value imputation in high-dimensional phenomic data: imputable or not, and how?” In: <em>BMC Bioinformatics</em> 15.1, pp. 346–346. DOI: <a href="https://doi.org/10.1186/s12859-014-0346-6">10.1186/s12859-014-0346-6</a>.</cite></p> <p><cite>Sharma, N. and K. Saroha (2015). “Study of dimension reduction methodologies in data mining”. In: <em>International Conference on Computing, Communication & Automation</em>. Greater Noida, India: IEEE, pp. 133–137. ISBN: 978-1-4799-8890-7. DOI: <a href="https://doi.org/10.1109/CCAA.2015.7148359">10.1109/CCAA.2015.7148359</a>. URL: <a href="http://ieeexplore.ieee.org/document/7148359/">http://ieeexplore.ieee.org/document/7148359/</a> (visited on Apr. 07, 2022).</cite></p> --- # References 2 <p><cite>Abdi, H. and D. Valentin (2007). “Multiple correspondence analysis”. In: <em>Encyclopedia of measurement and statistics</em> 2.4, pp. 651–657.</cite></p> <p><cite>Venables, W. N. and B. D. Ripley (2013). <em>Modern applied statistics with S-PLUS</em>. Berlin: Springer Science & Business Media.</cite></p> <p><cite>Kaiser, H. F. (1960). “The application of electronic computers to factor analysis”. In: <em>Educational and Psychological Measurement</em> 20.1, pp. 141–151.</cite></p> <p><cite>Kuhn, M. and K. Johnson (2013). “Over-fitting and model tuning”. In: <em>Applied predictive modeling</em>. Berlin: Springer, pp. 61–92.</cite></p> <p><cite>Cox, D. R. (1972). “Regression models and life-tables”. In: <em>Journal of the Royal Statistical Society: Series B (Methodological)</em> 34.2, pp. 187–202.</cite></p> --- # References 3 <p><cite>Kaplan, E. L. and P. Meier (1958). “Nonparametric estimation from incomplete observations”. In: <em>Journal of the American Statistical Association</em> 53.282, pp. 457–481.</cite></p> <p><cite>Nguyen-Sy, T., J. Wakim, Q. To, et al. (2020). “Predicting the compressive strength of concrete from its compositions and age using the extreme gradient boosting method”. In: <em>Construction and Building Materials</em> 260, p. 119757.</cite></p> <p><cite>Sheridan, R. P., W. M. Wang, A. Liaw, et al. (2016). “Extreme gradient boosting as a method for quantitative structure–activity relationships”. In: <em>Journal of Chemical Information and Modeling</em> 56.12, pp. 2353–2360.</cite></p> <p><cite>Simon, N., J. Friedman, T. Hastie, et al. (2011). “Regularization Paths for Cox's Proportional Hazards Model via Coordinate Descent”. In: <em>Journal of Statistical Software</em> 39.5, pp. 1–13. URL: <a href="https://www.jstatsoft.org/v39/i05/">https://www.jstatsoft.org/v39/i05/</a>.</cite></p> <p><cite>Goel, M. K., P. Khanna, and J. Kishore (2010). “Understanding survival analysis: Kaplan-Meier estimate”. In: <em>International journal of Ayurveda research</em> 1.4, p. 274.</cite></p> --- # References 4 <p><cite>Grambsch, P. M. and T. M. Therneau (1994). “Proportional hazards tests and diagnostics based on weighted residuals”. In: <em>Biometrika</em> 81.3, pp. 515–526.</cite></p> <p><cite>Schmid, M., M. N. Wright, and A. Ziegler (2016). “On the use of Harrell’s C for clinical risk prediction via random survival forests”. In: <em>Expert Systems with Applications</em> 63, pp. 450–459.</cite></p> <p><cite>Gareth, J., W. Daniela, H. Trevor, et al. (2013). <em>An introduction to statistical learning: with applications in R</em>. Berlin: Spinger.</cite></p> <p><cite>Zhang, Z., J. Reinikainen, K. A. Adeleke, et al. (2018). “Time-varying covariates and coefficients in Cox regression models”. In: <em>Annals of Translational Medicine</em> 6.7.</cite></p> <p><cite>Fisher, L. D. and in, Danyu Y (1999). “Time-dependent covariates in the Cox proportional-hazards regression model”. In: <em>Annual Review of Public Health</em> 20.1, pp. 145–157.</cite></p> --- # References 5 <p><cite>Pangallo, M. and M. Loberto (2018). “Home is where the ad is: online interest proxies housing demand”. In: <em>EPJ Data science</em> 7.1, p. 47.</cite></p> <p><cite>Jahani, E., P. Sundsøy, J. Bjelland, et al. (2017). “Improving official statistics in emerging markets using machine learning and mobile phone data”. In: <em>EPJ Data Science</em> 6.1, p. 3.</cite></p>